Best practices for quality workflow

You can build your quality workflow in many different ways. For the best possible results, we recommend the following practices:

- Use continuous feedback to collaborate with your team

- Perform random and targeted reviews

- Add quality metrics

- Set up programmatic QA

Using continuous feedback to collaborate with your team

To help labelers and reviewers meet the project's labeling requirements, you can set labeling instructions. The instructions also serve as a reference if questions arise.

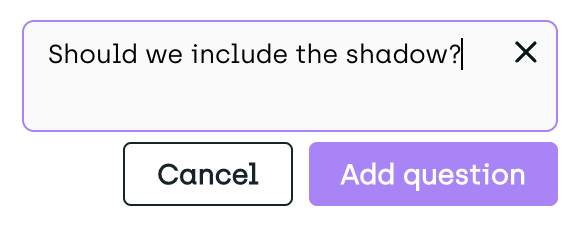

Questions from labelers

If labelers are unsure how to handle an asset, they can ask questions through the issues and questions panel. Reviewers and managers then answer those questions.

Labeler asks a question

Issues raised by reviewers and managers

Reviewers and managers can create issues on annotated assets and then send the assets back to the same (or a different) labeler for correction.

Reviewer adds an issue

The number of open issues is shown on the issue button and is also available from the Analytics page.

Issues & Questions button

Performing random and targeted reviews

Perform focused reviews in the Explore view

Use the Explore view and the available filters to focus your review on the most relevant assets, classes, and labelers. For instance:

- Show only labels generated today to run a regular, daily check.

- Focus only on new labelers to check their work more closely.

- Focus on complex categories with a high error probability.

Use Explore filters for a more focused review

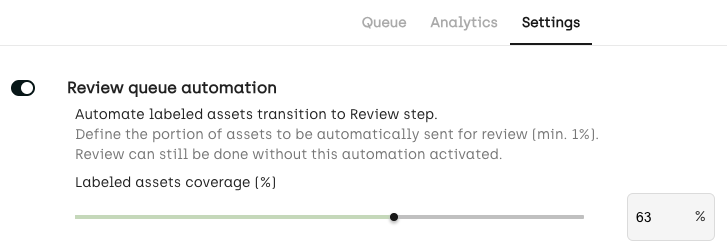
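The filtering logic described above can be sketched in plain Python. This is only an illustration of how the three example filters narrow down a review. The `Label` record, the `select_for_review` helper, and the sample data are all hypothetical and are not part of the Kili app or its API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Label:
    """Hypothetical, simplified label record used only for this sketch."""
    asset_id: str
    labeler: str
    category: str
    created_on: date


def select_for_review(labels, *, created_on=None, labelers=None, categories=None):
    """Return the labels that match every filter that is set (None = no filter)."""
    selected = []
    for label in labels:
        if created_on is not None and label.created_on != created_on:
            continue
        if labelers is not None and label.labeler not in labelers:
            continue
        if categories is not None and label.category not in categories:
            continue
        selected.append(label)
    return selected


# Made-up sample data.
labels = [
    Label("a1", "alice", "CAR", date(2024, 5, 2)),
    Label("a2", "bob", "PEDESTRIAN", date(2024, 5, 2)),
    Label("a3", "bob", "CAR", date(2024, 5, 1)),
]

daily_check = select_for_review(labels, created_on=date(2024, 5, 2))
new_labeler_check = select_for_review(labels, labelers={"bob"})
hard_class_check = select_for_review(labels, categories={"PEDESTRIAN"})
```

Filters can also be combined, for example to review only what a new labeler produced today in a difficult category.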

Automated review

Activate Review queue automation to make the Kili app randomly pick assets for review. Review queue automation eliminates human bias when assigning assets to be reviewed.
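To show why random selection removes human bias, here is a minimal sketch of uniform random sampling. It does not describe how the Kili app implements review queue automation. The function name `pick_for_review` and the `review_fraction` and `seed` parameters are assumptions made for this example.

```python
import random


def pick_for_review(asset_ids, review_fraction, seed=None):
    """Pick a uniform random subset of assets for review.

    Every asset has the same chance of being selected, so no person
    decides which labeler or asset gets checked.
    """
    if not 0 <= review_fraction <= 1:
        raise ValueError("review_fraction must be between 0 and 1")
    rng = random.Random(seed)  # a fixed seed makes the pick reproducible
    assets = list(asset_ids)
    count = round(len(assets) * review_fraction)
    return rng.sample(assets, count)


# Hypothetical asset identifiers.
all_assets = [f"asset-{i}" for i in range(10)]
to_review = pick_for_review(all_assets, review_fraction=0.2, seed=42)
```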


Refer to this short video for information on how to handle the Explore view and the automated review queue:

To check the review score per labeler, open the Analytics page and then go to the Quality Insights tab.

For information on how the review score is calculated, refer to Calculation rules for quality metrics.

For more information on the review process, refer to Reviewing labeled assets.

Adding quality metrics

Consensus

Consensus is a good choice if you want to evaluate simple classification tasks, where the answers of several labelers can be compared directly.

In the project quality settings, you can set the percentage of assets covered by consensus and the number of labelers per consensus.

You can check consensus results in the Explore view and on the Analytics page of your project.

Quality insights based on consensus
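As an illustration of what a consensus measure captures for a simple classification task, the sketch below computes the share of labelers who chose the most common category. This is an assumed, simplified agreement measure and not the formula used by the app; for the actual calculation, refer to Calculation rules for quality metrics. The function name `consensus_score` is hypothetical.

```python
from collections import Counter


def consensus_score(categories):
    """Share of labelers who agree with the most frequent category.

    `categories` holds one category per labeler for the same asset.
    NOTE: simplified agreement measure, assumed for illustration only.
    """
    if not categories:
        raise ValueError("at least one label is required")
    _, top_count = Counter(categories).most_common(1)[0]
    return top_count / len(categories)


# Three labelers per consensus asset (made-up answers).
full_agreement = consensus_score(["CAT", "CAT", "CAT"])
partial_agreement = consensus_score(["CAT", "CAT", "DOG"])
```

With three labelers per asset, a single disagreement already lowers this measure to two thirds, which is one reason to keep the number of labelers per consensus in mind when reading the results.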

Honeypot

Use Honeypot to measure the performance of a new team working on your project. To do that, set some of your annotated assets as honeypots (ground truth).

You can check the honeypot scores on the assets Queue page, in the Explore view, and on the Analytics page of your project.
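The idea behind honeypot can be sketched as comparing each labeler's answers with the ground truth on the honeypot assets only. The example below assumes a simple classification task and exact-match scoring; it is not the app's scoring rule, and the names `honeypot_scores`, `ground_truth`, and `submissions` are hypothetical.

```python
def honeypot_scores(ground_truth, submissions):
    """Per-labeler share of honeypot assets labeled like the ground truth.

    ground_truth: dict mapping honeypot asset id -> expected category.
    submissions:  iterable of (labeler, asset_id, category) tuples.
    Assets that are not honeypots are ignored.
    """
    hits = {}
    totals = {}
    for labeler, asset_id, category in submissions:
        if asset_id not in ground_truth:
            continue  # not a honeypot, so there is nothing to compare against
        totals[labeler] = totals.get(labeler, 0) + 1
        if category == ground_truth[asset_id]:
            hits[labeler] = hits.get(labeler, 0) + 1
    return {labeler: hits.get(labeler, 0) / total for labeler, total in totals.items()}


# Made-up data: two honeypot assets and one regular asset ("a9").
ground_truth = {"h1": "CAT", "h2": "DOG"}
submissions = [
    ("alice", "h1", "CAT"),
    ("alice", "h2", "DOG"),
    ("bob", "h1", "CAT"),
    ("bob", "h2", "CAT"),
    ("bob", "a9", "DOG"),
]
scores = honeypot_scores(ground_truth, submissions)
```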

Setting up programmatic QA

You can simplify and speed up your QA process by using a QA bot, which saves your labelers and reviewers some work. To illustrate how automated QA works, here are example videos:

For more information, refer to our QA bot use case.
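As a rough idea of what a QA bot can check, the sketch below applies a few rule-based sanity checks to bounding boxes and returns human-readable issue texts. Everything here is an assumption made for illustration: the `Box` structure, the normalized 0 to 1 coordinates, the `min_area` threshold, and the rules themselves. Fetching labels from a project and posting the resulting issues are not shown.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding box with coordinates normalized to [0, 1]."""
    category: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def check_boxes(boxes, min_area=0.0005):
    """Return one issue text per rule violation found in `boxes`."""
    issues = []
    for index, box in enumerate(boxes):
        width = box.x_max - box.x_min
        height = box.y_max - box.y_min
        if width <= 0 or height <= 0:
            issues.append(f"Box {index} ({box.category}): corners are inverted or degenerate")
            continue  # the remaining rules need a valid box
        if min(box.x_min, box.y_min) < 0 or max(box.x_max, box.y_max) > 1:
            issues.append(f"Box {index} ({box.category}): extends outside the image")
        if width * height < min_area:
            issues.append(f"Box {index} ({box.category}): is suspiciously small")
    return issues


# Made-up annotations: one valid box, one tiny box, one box outside the image.
boxes = [
    Box("CAR", 0.1, 0.1, 0.5, 0.5),
    Box("CAR", 0.2, 0.2, 0.21, 0.21),
    Box("CAR", 0.9, 0.9, 1.2, 1.0),
]
issues = check_boxes(boxes)
```

A bot built along these lines would run after each submission and raise the returned texts as issues on the asset, so that reviewers only need to look at assets that pass the automatic checks.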

Learn more

You can access quality KPIs at two levels:

- Asset level, from the table view in the Queue page.

- Labeler level, from the Analytics page.
